What is Ampere Architecture?

Nvidia has changed modern graphics cards with innovations such as CUDA cores, Tensor Cores, and the AI-assisted Ampere architecture, which are used to solve complex computing problems. Nvidia's main goal appears to be building the fastest graphics cards, and its Ampere GPUs are built to handle demanding in-game graphics processing.

What is Nvidia Ampere Architecture?

Tensor Cores:

Nvidia first introduced Tensor Cores, dedicated units that accelerate the matrix math behind AI, with its Volta architecture. The NVIDIA DGX-1 was an early system built for deep learning, but it is a server rather than a GPU. The first GPU with Tensor Cores was the Tesla V100, which was available with up to 32GB of memory, the most at that time. Today, expensive professional GPUs from PNY and other vendors come with 48GB of memory, which is far more than gaming needs.

Ampere's third-generation Tensor Cores add support for TF32 (TensorFloat-32) and FP64 (64-bit double-precision floating point). TF32 makes AI training faster and more efficient, while FP64 support extends the power of Tensor Cores to HPC (high-performance computing).

TF32 behaves like FP32 from the programmer's point of view, which is what makes it elegant: Nvidia states it can deliver speedups of up to 20X for AI without requiring any code change. FP64 Tensor Cores, new in the NVIDIA Ampere architecture, make it possible to accelerate FP64 matrix operations across a wide range of HPC applications.
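TF32 keeps FP32's sign bit and 8-bit exponent (so it covers the same numeric range) but only 10 mantissa bits, the same precision as FP16. The sketch below emulates that reduced precision in pure Python by rounding an FP32 value's 23-bit mantissa down to 10 bits. It assumes round-to-nearest-even and ignores NaN handling; it illustrates the number format only and says nothing about how Tensor Cores implement it internally.

```python
import struct

MANTISSA_BITS_FP32 = 23
MANTISSA_BITS_TF32 = 10
DROPPED = MANTISSA_BITS_FP32 - MANTISSA_BITS_TF32  # 13 low mantissa bits discarded


def fp32_bits(x: float) -> int:
    """IEEE 754 single-precision bit pattern of x (x is first rounded to FP32)."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_fp32(b: int) -> float:
    """Reinterpret a 32-bit pattern as an FP32 value."""
    return struct.unpack("<f", struct.pack("<I", b))[0]


def round_to_tf32(x: float) -> float:
    """Round x to TF32 precision with round-to-nearest-even (NaN not handled)."""
    b = fp32_bits(x)
    lsb = (b >> DROPPED) & 1                  # last kept mantissa bit, for ties-to-even
    b += (1 << (DROPPED - 1)) - 1 + lsb       # add just under half an ULP (+1 if odd)
    b &= ~((1 << DROPPED) - 1) & 0xFFFFFFFF   # clear the 13 discarded bits
    return bits_to_fp32(b)


print(round_to_tf32(1 / 3))  # 1/3 kept to only 10 mantissa bits
```

Because the exponent is untouched, values far outside FP16's range (such as 1e30) survive the conversion; only the fine-grained precision is lost.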

Tensor Cores deliver up to 2X performance compared to previous-generation GPUs for a variety of applications, including deep learning and computer vision. To make Tensor Cores easier to use, NVIDIA provides Automatic Mixed Precision (AMP), a software feature in deep learning frameworks rather than a hardware instruction set. AMP automatically chooses, operation by operation, between single precision (FP32) and a 16-bit format, either half precision (FP16) or bfloat16 (BF16), to accelerate the application.
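When AMP uses FP16, it usually relies on loss scaling: gradients are multiplied by a scale factor before being stored in FP16, so that tiny values do not underflow to zero, and divided back out before the weight update. Below is a minimal pure-Python sketch of that effect, using the standard `struct` module's `'e'` (IEEE half-precision) format to emulate FP16 storage. The gradient value and the 65536 scale factor are arbitrary illustrative choices. In PyTorch, `torch.autocast` and `torch.cuda.amp.GradScaler` handle precision selection and loss scaling automatically.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to IEEE 754 half precision (FP16) and back, emulating FP16 storage."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def stored_gradient(grad: float, loss_scale: float = 1.0) -> float:
    """Scale a gradient, store it in FP16, then unscale it in full precision."""
    return to_fp16(grad * loss_scale) / loss_scale


tiny_grad = 1e-8                            # below FP16's smallest subnormal (~6e-8)
print(stored_gradient(tiny_grad))           # 0.0: the gradient underflows and is lost
print(stored_gradient(tiny_grad, 65536.0))  # close to 1e-8: scaling kept it representable
```

Real loss scalers such as `GradScaler` also lower the scale factor when scaled values overflow FP16's maximum of 65504; this sketch would instead raise `OverflowError` from `struct.pack`.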

AI and HPC Assistance:

Artificial intelligence (AI) and high-performance computing (HPC) have revolutionized the way we solve big problems. These technologies are changing the world around us and are bringing many benefits. As AI and HPC technology continue to evolve, they will become even more important. They will enable us to make better decisions and improve our lives.

AI and HPC are used for many purposes, including developing self-driving cars, solving scientific problems, and understanding how things work in the world. Scientists have built AI systems that can perform tasks once thought to require human intelligence. AI works by training models on data so that they learn; a trained model can then look for patterns and analyze new data.

Nvidia Transistors:

The NVIDIA A100, the first GPU based on the Ampere architecture, was the largest 7-nanometer chip ever built when it launched. It packs 54 billion transistors and features six key innovations. Chips like this keep Nvidia among the leading graphics card companies, and its GPUs are efficient for programming, animation, video rendering, mining, and gaming.

Third-Generation NVLink:

Scaling applications across multiple GPUs requires moving large amounts of data between them extremely quickly. The third generation of NVIDIA NVLink, introduced with the NVIDIA Ampere architecture, doubles GPU-to-GPU bandwidth over the previous generation to 600 GB/sec, nearly 10X the bandwidth of PCIe Gen4. Each A100 has 12 NVLink links, each carrying 25 GB/sec in each direction, and NVIDIA NVSwitch connects multiple NVLinks so that every GPU can communicate with every other GPU at full NVLink speed.
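The bandwidth figures can be checked with a little arithmetic. This sketch assumes 12 links at 25 GB/s per direction for the A100 and roughly 32 GB/s per direction for a PCIe Gen4 x16 slot (an approximation), and it assumes a one-way copy can use every link at full rate, which real topologies and protocol overhead will not always allow.

```python
# Assumed figures: 12 NVLink links per A100, 25 GB/s per link per direction,
# and roughly 32 GB/s per direction for a PCIe Gen4 x16 slot.
NVLINK_LINKS = 12
NVLINK_GB_S_PER_LINK_PER_DIR = 25
PCIE4_X16_GB_S_PER_DIR = 32


def total_bandwidth(per_dir_gb_s: float, links: int = 1) -> float:
    """Aggregate bandwidth in GB/s, counting both directions of every link."""
    return per_dir_gb_s * 2 * links


def one_way_copy_seconds(size_gb: float, per_dir_gb_s: float, links: int = 1) -> float:
    """Time to push size_gb in one direction, assuming all links run at full rate."""
    return size_gb / (per_dir_gb_s * links)


nvlink = total_bandwidth(NVLINK_GB_S_PER_LINK_PER_DIR, NVLINK_LINKS)  # 600 GB/s
pcie = total_bandwidth(PCIE4_X16_GB_S_PER_DIR)                      # 64 GB/s
print(f"NVLink/PCIe ratio: {nvlink / pcie:.1f}x")                   # 9.4x
print(f"40 GB over NVLink: {one_way_copy_seconds(40, NVLINK_GB_S_PER_LINK_PER_DIR, NVLINK_LINKS):.3f} s")
print(f"40 GB over PCIe 4: {one_way_copy_seconds(40, PCIE4_X16_GB_S_PER_DIR):.3f} s")
```

The ratio of about 9.4x is where the "nearly 10X" comparison with PCIe Gen4 comes from.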

Multi-Instance GPU (MIG):

Most AI applications can benefit from GPU acceleration. However, not every AI application needs the performance of a full GPU. Multi-instance GPU (MIG) is a feature supported on A100 and A30 GPUs that allows workloads to share the GPU. With MIG, each GPU can be partitioned into multiple GPU instances, fully isolated and secured at the hardware level with their own high-bandwidth memory, cache, and compute cores.

Now, developers can access breakthrough acceleration for all their applications, big and small, and get guaranteed quality of service. And IT administrators can offer right-sized GPU acceleration for optimal utilization and expand access to every user and application across both bare-metal and virtualized environments.
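To make the partitioning idea concrete, here is a simplified budget check for an A100 40GB, which exposes 7 compute slices and 8 memory slices. The profile names follow NVIDIA's `<compute>g.<memory>gb` convention, but this sketch only checks whether the requested instances fit within the slice budget. It is an assumption-laden simplification: real MIG also enforces placement rules, so some combinations that pass this check may still be rejected by the driver.

```python
from typing import Dict, List, Tuple

# Simplified A100 40GB MIG profiles: name -> (compute slices, memory slices).
A100_40GB_PROFILES: Dict[str, Tuple[int, int]] = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}
COMPUTE_SLICES, MEMORY_SLICES = 7, 8


def fits(requested: List[str]) -> bool:
    """True if the requested instances fit the slice budget (placement rules ignored)."""
    compute = sum(A100_40GB_PROFILES[p][0] for p in requested)
    memory = sum(A100_40GB_PROFILES[p][1] for p in requested)
    return compute <= COMPUTE_SLICES and memory <= MEMORY_SLICES


print(fits(["3g.20gb", "4g.20gb"]))  # True: uses all 7 compute and 8 memory slices
print(fits(["4g.20gb", "4g.20gb"]))  # False: needs 8 compute slices
```

Because each instance gets its own dedicated slices, a workload in one instance cannot consume the memory or compute reserved for another, which is what makes the quality-of-service guarantee possible.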

Structural Sparsity:

Modern AI networks are big and getting bigger, with millions and, in some cases, billions of parameters.

Not all of these parameters are needed for accurate predictions. Some can be set to zero, making the model "sparse" without compromising accuracy.

Tensor Cores in the A100 can provide up to 2X higher performance for sparse models. While the sparsity feature most readily benefits AI inference, it can also be used to improve the performance of model training.
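The sparsity that Ampere's Tensor Cores accelerate is fine-grained structured sparsity with a 2:4 pattern: in every group of four consecutive weights, at most two are nonzero. A minimal sketch of magnitude-based 2:4 pruning on a flat list of weights follows; keeping the two largest-magnitude values per group is one common heuristic, assumed here for illustration. In practice the pruned network is typically fine-tuned afterwards to recover accuracy.

```python
from typing import List


def prune_2_of_4(weights: List[float]) -> List[float]:
    """Apply 2:4 structured sparsity: in each group of four consecutive weights,
    keep the two with the largest magnitude and zero the other two."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned: List[float] = []
    for i in range(0, len(weights), 4):
        group = weights[i:i + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda j: abs(group[j]), reverse=True)[:2]
        pruned.extend(w if j in keep else 0.0 for j, w in enumerate(group))
    return pruned


print(prune_2_of_4([0.1, -0.9, 0.4, 0.05, 1.0, 2.0, -3.0, 0.5]))
```

The fixed 2-of-4 structure is what lets hardware skip the zeros efficiently: the nonzero values can be stored compactly alongside small per-group indices, unlike unstructured sparsity where zeros can appear anywhere.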

Second-Generation RT Cores:

Built on the NVIDIA Ampere architecture with second-generation RT Cores, the NVIDIA A40 delivers massive speedups for workloads such as photorealistic rendering of movie content, architectural design evaluations, and virtual prototyping of product designs.

RT Cores speed up the rendering of ray-traced motion blur for faster results with higher visual accuracy. They can also run ray tracing concurrently with either shading or denoising.
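RT Cores accelerate, in hardware, the operations that dominate ray tracing: traversing a bounding volume hierarchy (BVH) and testing rays against triangles. As a rough software analogue, here is the classic Möller-Trumbore ray-triangle intersection test in Python. It is a textbook algorithm chosen for illustration, not a description of how RT Cores are actually implemented.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin: Vec3, direction: Vec3, v0: Vec3, v1: Vec3, v2: Vec3,
                 eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore test: distance t along the ray to the hit, or None on a miss."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:               # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det          # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > eps else None    # ignore hits behind the ray origin


tri = ((-1.0, -1.0, 0.0), (1.0, -1.0, 0.0), (0.0, 1.0, 0.0))
print(ray_triangle((0.0, 0.0, -1.0), (0.0, 0.0, 1.0), *tri))  # hit at t = 1.0
print(ray_triangle((5.0, 5.0, -1.0), (0.0, 0.0, 1.0), *tri))  # miss: None
```

A real renderer runs a test like this millions of times per frame, and a BVH is used to skip most triangles entirely; offloading both steps to fixed-function hardware is what frees the shader cores for shading and denoising.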

Smarter and Faster Memory:

There is no doubt about the computational performance of the A100. The A100 80GB offers more than 2 TB/sec of memory bandwidth, making it much faster than the previous generation. It also has 40MB of on-chip level 2 (L2) cache, nearly 7 times larger than that of the previous generation.
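The memory figures can be sanity-checked with simple arithmetic. This sketch assumes the previous-generation V100 had a 6 MB L2 cache, rounds the A100 80GB's "over 2 TB/sec" down to exactly 2.0 TB/s, and uses decimal gigabytes; it gives a lower bound on the time for one full pass over memory, since real kernels rarely sustain peak bandwidth.

```python
# Assumptions: V100 L2 = 6 MB, A100 80GB bandwidth rounded to 2.0 TB/s, decimal units.
A100_L2_MB = 40
V100_L2_MB = 6
A100_MEMORY_BYTES = 80e9
BANDWIDTH_BYTES_PER_S = 2.0e12

l2_ratio = A100_L2_MB / V100_L2_MB
sweep_seconds = A100_MEMORY_BYTES / BANDWIDTH_BYTES_PER_S

print(f"L2 cache ratio: {l2_ratio:.1f}x")                             # 6.7x
print(f"time to read all 80 GB once: {sweep_seconds * 1e3:.0f} ms")  # 40 ms
```

A ratio of about 6.7x is the basis for the "nearly 7 times larger" L2 claim.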

GPU Scaling:

GPU scaling is often overlooked by people buying expensive GPUs, but if you are paying more, you should be familiar with it. Nvidia designs its Ampere GPUs to be deployed at scale, which makes them a good fit for cloud, data center, networking, and security workloads.

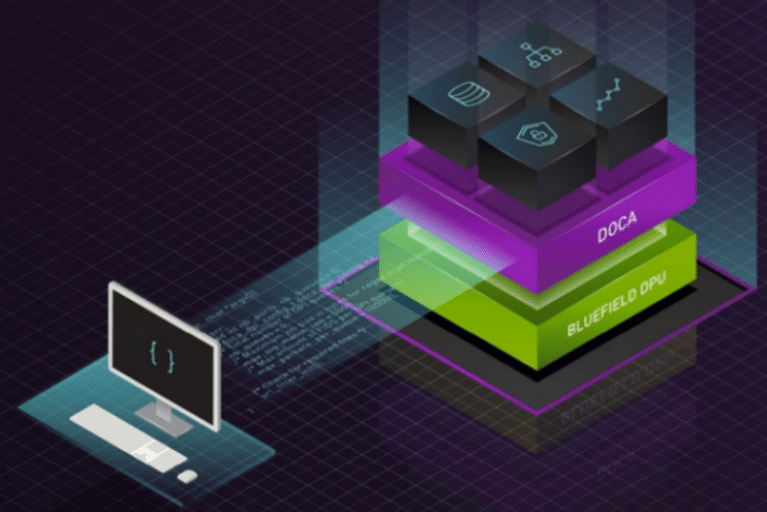

Unified Compute and Network Acceleration:

NVIDIA Ampere converged accelerators bring unprecedented performance for deep learning and data processing in edge computing, telecommunications, and network security. Each one combines an NVIDIA Ampere GPU with an NVIDIA BlueField-2 data processing unit (DPU) on a single card.

BlueField-2 combines the NVIDIA ConnectX-6 Dx network adapter with programmable Arm cores and hardware offloads for software-defined storage, networking, security, and management.

NVIDIA's hardware-based converged accelerators can deliver higher performance and better energy efficiency. They can also improve the data center's ability to secure its entire computing infrastructure.

Power Optimized for Any Server:

With the smallest footprint in the portfolio, the NVIDIA A2 is designed for inference and deployment in entry-level servers constrained by space and thermal requirements, such as 5G edge and industrial environments. Its low-profile form factor and configurable thermal design power (TDP) of 40W to 60W let it fit into almost any server.

Secure Deployments:

Secure deployments are a big issue for companies. Companies need to be sure that their servers are secured from outside hackers. They need to make sure that any information stored on the server is safe. If they don’t, they could end up losing a lot of money. Many people believe that the server is the main place where a lot of sensitive information is kept.

Therefore, it is very important to make sure that the servers are secure. One of the ways to make sure that a server is secure is to make sure that it is protected from malware attacks. Malware can cause a lot of damage to a company. You may not even know that you have it until after it has already caused you a lot of trouble.

Density Optimized Design:

The NVIDIA A16 GPU comes in a quad-GPU board design optimized for user density. Combined with NVIDIA Virtual PC (vPC) software, it enables graphics-rich virtual PCs that are accessible from anywhere.

Compared with CPU-only virtual desktop infrastructure (VDI), the NVIDIA A16 delivers higher frame rates and lower end-user latency, resulting in more responsive applications and a user experience that's indistinguishable from a native PC. The NVIDIA A16 GPU also supports up to eight display outputs including four displays per GPU, making it easy to connect up to 16 displays to a single desktop.
